How to save P1 reader data with Smartmeter2mqtt and n8n to QuestDB

Introduction

This post explains creating an n8n flow to receive parsed P1 reader data from Smartmeter2mqtt and save it to QuestDB. Ultimately, QuestDB can be used as a data source for tools like Grafana or Budibase.

Requirements

Ensure the following docker containers are running and configured:

- QuestDB

- n8n

- Smartmeter2mqtt - you may need to add or change the url of the n8n webhook node after the n8n flow has been created

How To

Create n8n flow

We will create a flow in n8n that will:

- Create tables in QuestDB

- Process data from Smartmeter2mqtt sent to the n8n flow via webhook

- Schedule data retention for each QuestDB table using a cron node

-

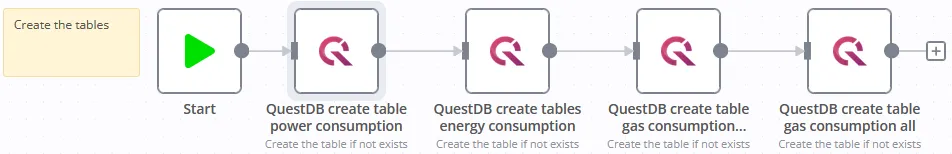

Create the tables

smarthome_power_consumptionPower is a measure of electricity at a single point of time. Data retention is 30 dayssmarthome_energy_consumptionElectrical Energy is a unit of measurement over time (historical). Usually expressed in kilowatt hours (kWh). Data retention is 5 yearssmarthome_gas_consumption_allGas consumption all (approx. every 5 min.). Data retention is 30 dayssmarthome_gas_consumption_historicalGas consumption historical (every hour). Data retention is 5 years

The tables were created with the following SQL queries:

CREATE TABLE IF NOT EXISTS smarthome_power_consumption(powerTs TIMESTAMP, addedTs TIMESTAMP, currentTarrif INT, currentUsage FLOAT, currentDelivery FLOAT, calculatedUsage INT) TIMESTAMP(powerTs) PARTITION BY DAY;CREATE TABLE IF NOT EXISTS smarthome_energy_consumption(powerTs TIMESTAMP, addedTs TIMESTAMP, currentTarrif INT, totalT1Use FLOAT, totalT1Delivered FLOAT, totalT2Use FLOAT, totalT2Delivered FLOAT) TIMESTAMP(powerTs) PARTITION BY MONTH;CREATE TABLE IF NOT EXISTS smarthome_gas_consumption_historical(gasTs TIMESTAMP, addedTs TIMESTAMP, gas_totalUse FLOAT) TIMESTAMP(gasTs) PARTITION BY MONTH;CREATE TABLE IF NOT EXISTS smarthome_gas_consumption_all(gasTs TIMESTAMP, addedTs TIMESTAMP, gas_totalUse FLOAT) TIMESTAMP(gasTs) PARTITION BY DAY;As you can see the timestamp (

powerTsorgasTs) of the measured value(s) is used as designated timestamp which enables partitioning tables by time. You can of course execute the SQL queries in the web console of QuestDB but I choose to execute the queries once with the n8n QuestDB nodes and save the queries in the n8n flow:

-

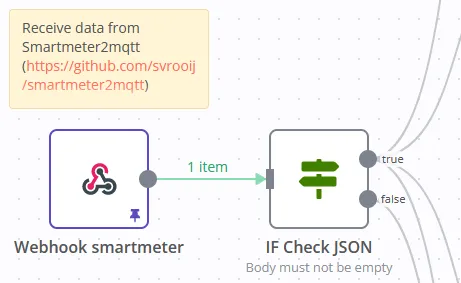

Process the data from Smartmeter2mqtt that is sent to the n8n flow using a webhook

The webrequest output of Smartmeter2mqtt will send the data (message) every 30 seconds to the webhook of the flow. When starting the n8n and Smartmeter2mqtt docker containers, empty messages can be sent to the webhook so the first step is to check whether the JSON body of the message contains fields (i.e. whether it is not empty):

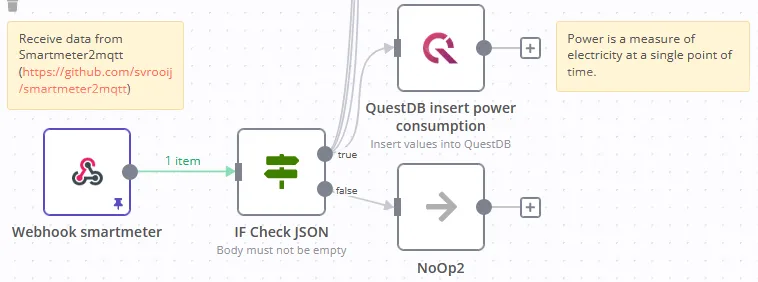

The expression that checks if the

crcfield exists in the JSON body:

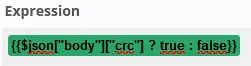

Then insert the power consumption. Because the insert operation of the QuestDB node didn’t work quite right I used the execute query operation and it works fine:

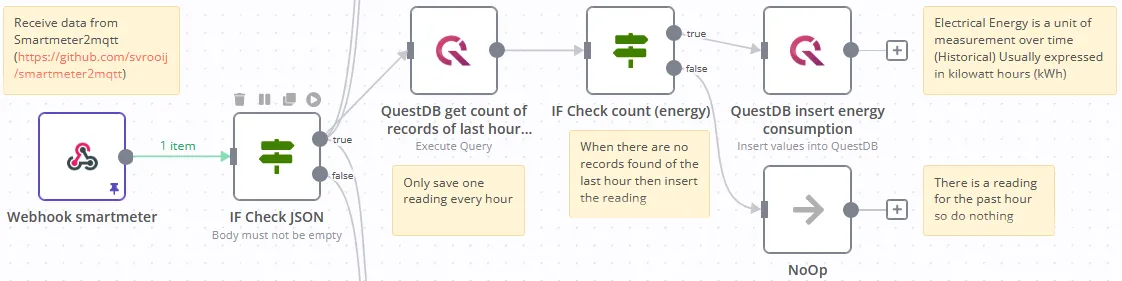

And then the energy consumption. Since we use this table for historical data, we store the value once an hour. This is checked using a query that returns a

count():

As an example the query with the

count():SELECT count() FROM (SELECT * FROM 'smarthome_energy_consumption' ORDER BY addedTs DESC LIMIT 1) WHERE powerTs > dateadd('h', -1, to_timezone(now(), 'Europe/Amsterdam'));As you can see, the last record is requested and the

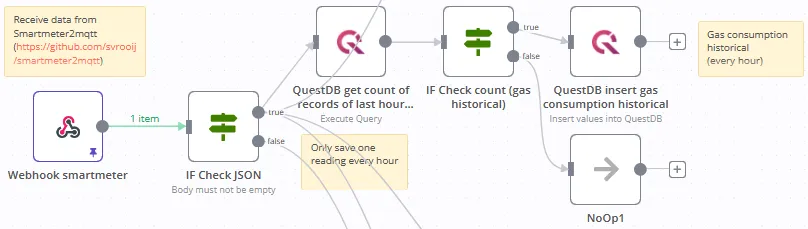

powerTstimestamp is compared with the current date and time.Then the historical gas consumption whose data is also saved once an hour:

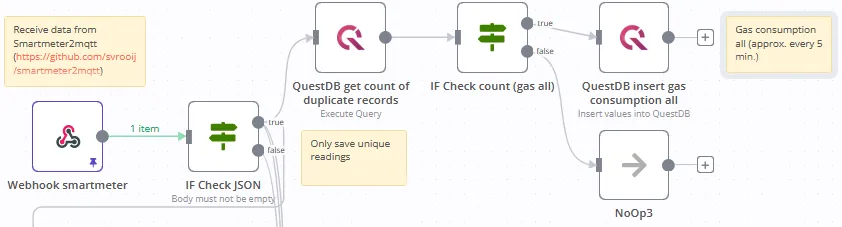

And finally all gas consumption. Since the gas consumption data only can change every five minutes, unlike the power consumption which changes every 30 seconds, I use a query to check whether the data contains a unique value. In this way, the data is only written if it is unique:

SELECT count() FROM (SELECT * FROM 'smarthome_gas_consumption_all' ORDER BY addedTs DESC LIMIT 1) WHERE gas_totalUse = CAST({{$node["Webhook smartmeter"].json["body"]["gas_totalUse"]}} AS FLOAT);

SELECT count() FROM (SELECT * FROM 'smarthome_gas_consumption_all' ORDER BY addedTs DESC LIMIT 1) WHERE gas_totalUse = CAST({{$node["Webhook smartmeter"].json["body"]["gas_totalUse"]}} AS FLOAT);This query compares the

gas_totalUseof the last row with the same field from the message received via the webhook. Remember that you have to convert the value withCASTto a float data type, otherwise the comparison will not work properly. -

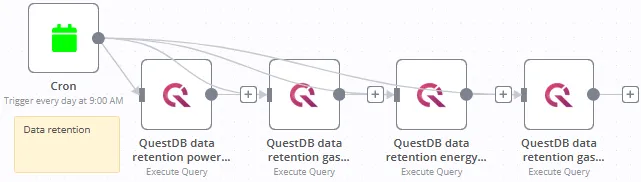

Schedule the data retention for each QuestDB table using a cron node

Now that the data is flowing in and is being stored in the tables, I’ve also added a

crontrigger that will delete old data at 9am every day. This ensures that:- the data from the

smarthome_power_consumptionandsmarthome_gas_consumption_alltables is stored for a maximum of 30 days - and the data from the

smarthome_energy_consumptionandsmarthome_gas_consumption_historicaltables is stored for a maximum of 5 years

For example, the SQL query for the power consumption table looks like this:

ALTER TABLE 'smarthome_power_consumption' DROP PARTITION WHERE powerTs < dateadd('d', -30, to_timezone(now(), 'Europe/Amsterdam'));With the

dateaddfunction you can set exactly how you want the data retetion to take place. - the data from the

n8n JSON flow (optional)

Below is the complete flow in JSON format. Make sure you have added a QuestDB account to the n8n credentials first:

Host: the IP address of the host QuestDB is running on

The following settings are correctly configured by default, but you can change them if desired:

Database:qdbUser:adminPassword:questSSL:DisablePort:8812(this is the PostgreSQL port and you must have set it up with your QuestDB Docker container)

And here we go with the full flow:

{ "name": "Smartmeter", "nodes": [ { "parameters": {}, "name": "Start", "type": "n8n-nodes-base.start", "typeVersion": 1, "position": [ -2240, -500 ], "id": "fd43675f-cda4-4ab4-bf82-b9e4c81eb54c" }, { "parameters": { "httpMethod": "POST", "path": "smartmeter", "options": {} }, "name": "Webhook smartmeter", "type": "n8n-nodes-base.webhook", "typeVersion": 1, "position": [ -2240, 360 ], "webhookId": "426ab664-c9c7-47ff-8fa5-f0cf81255f2a", "id": "15e74def-8a15-4479-8f2c-26094576fa6c", "notes": "Test" }, { "parameters": { "content": "Gas consumption historical \n (every hour)", "height": 80, "width": 150 }, "id": "bdf2d456-359c-460f-8c42-8e91e9140cc0", "name": "Note", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1100, 140 ] }, { "parameters": { "content": "Electrical Energy is a unit of measurement over time (Historical) Usually expressed in kilowatt hours (kWh)", "height": 100, "width": 220 }, "id": "341611cd-3406-4653-aa24-e9336e5e2e1a", "name": "Note1", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1100, 580 ] }, { "parameters": { "content": "Power is a measure of electricity at a single point of time.", "height": 80, "width": 220 }, "id": "ea7bdc20-414d-49cf-baff-0b221b166034", "name": "Note2", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1540, 960 ] }, { "parameters": { "operation": "executeQuery", "query": "=INSERT INTO 'smarthome_power_consumption' VALUES('{{$json[\"body\"][\"powerTs\"]}}',to_timezone(now(), 'Europe/Amsterdam'),{{$json[\"body\"][\"currentTarrif\"]}}, {{$json[\"body\"][\"currentUsage\"]}},{{$json[\"body\"][\"currentDelivery\"]}},{{$json[\"body\"][\"calculatedUsage\"]}});", "additionalFields": {} }, "id": "7cf56a83-8769-4451-befc-741e55e7dea4", "name": "QuestDB insert power consumption", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1740, 940 ], "notesInFlow": true, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Insert values into QuestDB" }, { "parameters": { "operation": "executeQuery", "query": "=INSERT INTO 'smarthome_energy_consumption' VALUES('{{$node[\"Webhook smartmeter\"].json[\"body\"][\"powerTs\"]}}',to_timezone(now(), 'Europe/Amsterdam'),{{$node[\"Webhook smartmeter\"].json[\"body\"][\"currentTarrif\"]}},{{$node[\"Webhook smartmeter\"].json[\"body\"][\"totalT1Use\"]}},{{$node[\"Webhook smartmeter\"].json[\"body\"][\"totalT1Delivered\"]}},{{$node[\"Webhook smartmeter\"].json[\"body\"][\"totalT2Use\"]}},{{$node[\"Webhook smartmeter\"].json[\"body\"][\"totalT2Delivered\"]}});", "additionalFields": {} }, "id": "ad62fd9a-ba11-4451-9f50-e4c49f3f1490", "name": "QuestDB insert energy consumption", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1300, 580 ], "notesInFlow": true, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Insert values into QuestDB" }, { "parameters": {}, "id": "3e803f81-b129-4abe-8c3d-14ac4292a22c", "name": "NoOp", "type": "n8n-nodes-base.noOp", "typeVersion": 1, "position": [ -1300, 780 ] }, { "parameters": { "content": "When there are no records found of the last hour then insert the reading", "height": 80, "width": 220 }, "id": "63526fb7-8023-4e7a-91c8-caff1b5b8e09", "name": "Note3", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1580, 760 ] }, { "parameters": { "content": "There is a reading for the past hour so do nothing", "height": 80, "width": 150 }, "id": "b325302f-4727-488f-a021-22400a704328", "name": "Note4", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1100, 780 ] }, { "parameters": { "content": "Only save one reading every hour", "height": 80, "width": 150 }, "id": "1ef246a6-4944-4f87-ba76-d01fb35468a9", "name": "Note5", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1760, 760 ] }, { "parameters": { "operation": "executeQuery", "query": "CREATE TABLE IF NOT EXISTS smarthome_energy_consumption(powerTs TIMESTAMP, addedTs TIMESTAMP, currentTarrif INT, totalT1Use FLOAT, totalT1Delivered FLOAT, totalT2Use FLOAT, totalT2Delivered FLOAT) TIMESTAMP(powerTs) PARTITION BY MONTH;", "additionalFields": {} }, "name": "QuestDB create tables energy consumption", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1880, -500 ], "notesInFlow": true, "id": "b199c7af-315e-423c-8d3c-6f19037abf70", "executeOnce": false, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Create the table if not exists" }, { "parameters": { "operation": "executeQuery", "query": "CREATE TABLE IF NOT EXISTS smarthome_power_consumption(powerTs TIMESTAMP, addedTs TIMESTAMP, currentTarrif INT, currentUsage FLOAT, currentDelivery FLOAT, calculatedUsage INT) TIMESTAMP(powerTs) PARTITION BY DAY;", "additionalFields": {} }, "name": "QuestDB create table power consumption", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -2080, -500 ], "notesInFlow": true, "id": "57ffe500-eb73-4250-971b-2112528db92f", "executeOnce": false, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Create the table if not exists" }, { "parameters": {}, "id": "4da11db2-14cb-4ad8-aa16-4128d2de68f0", "name": "NoOp1", "type": "n8n-nodes-base.noOp", "typeVersion": 1, "position": [ -1300, 320 ] }, { "parameters": { "content": "Only save unique readings", "height": 80, "width": 150 }, "id": "36ca0f79-e183-4a21-9299-caca54c1222d", "name": "Note6", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1760, -60 ] }, { "parameters": {}, "id": "95591378-b4e4-46a5-9455-d1c63d948137", "name": "NoOp2", "type": "n8n-nodes-base.noOp", "typeVersion": 1, "position": [ -1740, 1160 ] }, { "parameters": { "conditions": { "boolean": [ { "value1": "={{$json[\"body\"][\"crc\"] ? true : false}}", "value2": true } ] } }, "id": "6d2e4187-634c-41ab-af32-be443e9ab491", "name": "IF Check JSON", "type": "n8n-nodes-base.if", "typeVersion": 1, "position": [ -2020, 360 ], "notesInFlow": true, "notes": "Body must not be empty" }, { "parameters": { "conditions": { "string": [ { "value1": "={{ $json[\"count\"] }}", "value2": "0" } ] }, "combineOperation": "any" }, "id": "c04f16e3-c959-40d4-b10a-5a1d2139fe6d", "name": "IF Check count (energy)", "type": "n8n-nodes-base.if", "typeVersion": 1, "position": [ -1520, 580 ] }, { "parameters": { "operation": "executeQuery", "query": "=SELECT count() FROM (SELECT * FROM 'smarthome_energy_consumption' ORDER BY addedTs DESC LIMIT 1) WHERE powerTs > dateadd('h', -1, to_timezone(now(), 'Europe/Amsterdam'));", "additionalFields": {} }, "id": "53a50cc7-d73f-45e1-aebe-7e24dd309367", "name": "QuestDB get count of records of last hour (energy)", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1740, 580 ], "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } } }, { "parameters": { "content": "Gas consumption all (approx. every 5 min.)", "height": 80, "width": 150 }, "id": "95cd1671-ce7b-49fc-81c8-81d082fd3dff", "name": "Note7", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1120, -220 ] }, { "parameters": {}, "id": "ec735f9d-7c1a-4675-8668-ae227aab1d6f", "name": "NoOp3", "type": "n8n-nodes-base.noOp", "typeVersion": 1, "position": [ -1300, -40 ] }, { "parameters": { "operation": "executeQuery", "query": "=SELECT count() FROM (SELECT * FROM 'smarthome_gas_consumption_all' ORDER BY addedTs DESC LIMIT 1)\n WHERE gas_totalUse = CAST({{$node[\"Webhook smartmeter\"].json[\"body\"][\"gas_totalUse\"]}} AS FLOAT);", "additionalFields": {} }, "id": "3d42bd00-0b3f-4e68-a333-98a143477761", "name": "QuestDB get count of duplicate records", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1740, -240 ], "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } } }, { "parameters": { "operation": "executeQuery", "query": "CREATE TABLE IF NOT EXISTS smarthome_gas_consumption_all(gasTs TIMESTAMP, addedTs TIMESTAMP, gas_totalUse FLOAT) TIMESTAMP(gasTs) PARTITION BY DAY;", "additionalFields": {} }, "name": "QuestDB create table gas consumption all", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1480, -500 ], "notesInFlow": true, "id": "b5919d1e-ae96-4e82-bce7-e73d609fd63c", "executeOnce": false, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Create the table if not exists" }, { "parameters": { "operation": "executeQuery", "query": "CREATE TABLE IF NOT EXISTS smarthome_gas_consumption_historical(gasTs TIMESTAMP, addedTs TIMESTAMP, gas_totalUse FLOAT) TIMESTAMP(gasTs) PARTITION BY MONTH;", "additionalFields": {} }, "name": "QuestDB create table gas consumption historical", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1680, -500 ], "notesInFlow": true, "id": "bbd37a40-3397-4efc-ab9c-2557a30f3490", "executeOnce": false, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Create the table if not exists" }, { "parameters": { "conditions": { "string": [ { "value1": "={{ $json[\"count\"] }}", "value2": "0" } ] }, "combineOperation": "any" }, "id": "901207e7-2d1b-4e5d-bb32-42508e383417", "name": "IF Check count (gas all)", "type": "n8n-nodes-base.if", "typeVersion": 1, "position": [ -1520, -240 ] }, { "parameters": { "operation": "executeQuery", "query": "=INSERT INTO 'smarthome_gas_consumption_all' VALUES('{{$node[\"Webhook smartmeter\"].json[\"body\"][\"gas_ts\"]}}',to_timezone(now(), 'Europe/Amsterdam'),{{$node[\"Webhook smartmeter\"].json[\"body\"][\"gas_totalUse\"]}});", "additionalFields": {} }, "id": "4fefa83d-f19b-4b15-8ca8-4167fc419f4f", "name": "QuestDB insert gas consumption all", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1300, -240 ], "notesInFlow": true, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Insert values into QuestDB" }, { "parameters": { "operation": "executeQuery", "query": "=SELECT count() FROM (SELECT * FROM 'smarthome_gas_consumption_historical' ORDER BY addedTs DESC LIMIT 1)\n WHERE gasTs > dateadd('h', -1, to_timezone(now(), 'Europe/Amsterdam')) OR gas_totalUse = CAST({{$node[\"Webhook smartmeter\"].json[\"body\"][\"gas_totalUse\"]}} AS FLOAT);", "additionalFields": {} }, "id": "347852b5-5f2c-4b94-9af2-b98b7be4c328", "name": "QuestDB get count of records of last hour (gas historical)", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1740, 120 ], "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } } }, { "parameters": { "conditions": { "string": [ { "value1": "={{ $json[\"count\"] }}", "value2": "0" } ] }, "combineOperation": "any" }, "id": "5fd0fdf2-a708-45fa-9824-54bc043a1d6b", "name": "IF Check count (gas historical)", "type": "n8n-nodes-base.if", "typeVersion": 1, "position": [ -1520, 120 ] }, { "parameters": { "operation": "executeQuery", "query": "=INSERT INTO 'smarthome_gas_consumption_historical' VALUES('{{$node[\"Webhook smartmeter\"].json[\"body\"][\"gas_ts\"]}}',to_timezone(now(), 'Europe/Amsterdam'),{{$node[\"Webhook smartmeter\"].json[\"body\"][\"gas_totalUse\"]}});", "additionalFields": {} }, "id": "4ea5011c-96c2-45f6-b5fc-a5581bf26dad", "name": "QuestDB insert gas consumption historical", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -1300, 120 ], "notesInFlow": true, "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } }, "notes": "Insert values into QuestDB" }, { "parameters": { "triggerTimes": { "item": [ { "hour": 9 } ] } }, "id": "1f25ebd5-31e9-4dfc-8ed9-f6d219f3f643", "name": "Cron", "type": "n8n-nodes-base.cron", "typeVersion": 1, "position": [ -2240, 1320 ], "notesInFlow": true, "notes": "Trigger every day at 9:00 AM" }, { "parameters": { "content": "Data retention", "height": 80, "width": 150 }, "id": "fc640953-5ce0-470d-9b9c-67976ccd8432", "name": "Note9", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -2260, 1220 ] }, { "parameters": { "operation": "executeQuery", "query": "ALTER TABLE 'smarthome_power_consumption' DROP PARTITION WHERE powerTs < dateadd('d', -30, to_timezone(now(), 'Europe/Amsterdam'));", "additionalFields": {} }, "id": "302c9359-db0b-4e3d-aac0-2928e8e28b14", "name": "QuestDB data retention power consumption", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -2000, 1320 ], "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } } }, { "parameters": { "operation": "executeQuery", "query": "ALTER TABLE 'smarthome_gas_consumption_all' DROP PARTITION WHERE gasTs < dateadd('d', -30, to_timezone(now(), 'Europe/Amsterdam'));", "additionalFields": {} }, "id": "4b373d8a-0751-4624-973b-2c9e51b0f2df", "name": "QuestDB data retention gas consumption all", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -2000, 1500 ], "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } } }, { "parameters": { "operation": "executeQuery", "query": "ALTER TABLE 'smarthome_energy_consumption' DROP PARTITION WHERE powerTs < dateadd('y', -5, to_timezone(now(), 'Europe/Amsterdam'));", "additionalFields": {} }, "id": "9ce85e9c-6041-4dda-b0b5-02ba05584885", "name": "QuestDB data retention energy consumption", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -2000, 1680 ], "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } } }, { "parameters": { "operation": "executeQuery", "query": "ALTER TABLE 'smarthome_gas_consumption_historical' DROP PARTITION WHERE gasTs < dateadd('y', -5, to_timezone(now(), 'Europe/Amsterdam'));", "additionalFields": {} }, "id": "a24058a2-ecc8-4a17-a5d5-27accf5312dd", "name": "QuestDB data retention gas consumption historical", "type": "n8n-nodes-base.questDb", "typeVersion": 1, "position": [ -2000, 1860 ], "credentials": { "questDb": { "id": "1", "name": "QuestDB account" } } }, { "parameters": { "content": "Create the tables", "height": 80, "width": 160 }, "id": "d836dbc4-105c-44dd-ba53-a3b55419e692", "name": "Note10", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -2420, -500 ] }, { "parameters": { "content": "Receive data from Smartmeter2mqtt (https://github.com/svrooij/smartmeter2mqtt)", "height": 100, "width": 200 }, "id": "a71b14c3-12f3-4311-ac50-0bd6fcab2160", "name": "Note11", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -2280, 240 ] }, { "parameters": { "content": "Only save one reading every hour", "height": 80, "width": 150 }, "id": "c450a4a2-dcc0-43e7-b381-570fe99aa215", "name": "Note8", "type": "n8n-nodes-base.stickyNote", "typeVersion": 1, "position": [ -1760, 300 ] } ], "pinData": {}, "connections": { "Start": { "main": [ [ { "node": "QuestDB create table power consumption", "type": "main", "index": 0 } ] ] }, "Webhook smartmeter": { "main": [ [ { "node": "IF Check JSON", "type": "main", "index": 0 } ] ] }, "QuestDB create tables energy consumption": { "main": [ [ { "node": "QuestDB create table gas consumption historical", "type": "main", "index": 0 } ] ] }, "QuestDB create table power consumption": { "main": [ [ { "node": "QuestDB create tables energy consumption", "type": "main", "index": 0 } ] ] }, "IF Check JSON": { "main": [ [ { "node": "QuestDB insert power consumption", "type": "main", "index": 0 }, { "node": "QuestDB get count of records of last hour (gas historical)", "type": "main", "index": 0 }, { "node": "QuestDB get count of records of last hour (energy)", "type": "main", "index": 0 }, { "node": "QuestDB get count of duplicate records", "type": "main", "index": 0 } ], [ { "node": "NoOp2", "type": "main", "index": 0 } ] ] }, "IF Check count (energy)": { "main": [ [ { "node": "QuestDB insert energy consumption", "type": "main", "index": 0 } ], [ { "node": "NoOp", "type": "main", "index": 0 } ] ] }, "QuestDB get count of records of last hour (energy)": { "main": [ [ { "node": "IF Check count (energy)", "type": "main", "index": 0 } ] ] }, "QuestDB get count of duplicate records": { "main": [ [ { "node": "IF Check count (gas all)", "type": "main", "index": 0 } ] ] }, "QuestDB create table gas consumption historical": { "main": [ [ { "node": "QuestDB create table gas consumption all", "type": "main", "index": 0 } ] ] }, "IF Check count (gas all)": { "main": [ [ { "node": "QuestDB insert gas consumption all", "type": "main", "index": 0 } ], [ { "node": "NoOp3", "type": "main", "index": 0 } ] ] }, "QuestDB get count of records of last hour (gas historical)": { "main": [ [ { "node": "IF Check count (gas historical)", "type": "main", "index": 0 } ] ] }, "IF Check count (gas historical)": { "main": [ [ { "node": "QuestDB insert gas consumption historical", "type": "main", "index": 0 } ], [ { "node": "NoOp1", "type": "main", "index": 0 } ] ] }, "Cron": { "main": [ [ { "node": "QuestDB data retention power consumption", "type": "main", "index": 0 }, { "node": "QuestDB data retention gas consumption all", "type": "main", "index": 0 }, { "node": "QuestDB data retention energy consumption", "type": "main", "index": 0 }, { "node": "QuestDB data retention gas consumption historical", "type": "main", "index": 0 } ] ] } }, "active": false, "settings": { "saveDataSuccessExecution": "none", "executionTimeout": -1 }, "id": 2, "meta": { "instanceId": "4b59d62ed1e26e1a9992d44753b0b9e681ccb237a9a78b54fc65072af5f29162" }, "tags": [ ]}

No comments found for this note.

Join the discussion for this note on Github. Comments appear on this page instantly.