Serge LLaMA AI chat Windows Podman container setup

Introduction

Serge is a chat interface built on llama.cpp for running Alpaca models. Its fully dockerized setup made it the perfect choice for me, and its reliability convinced me further.

I chose to run Serge on Windows using WSL2 and Podman, because this setup makes installation and day-to-day use straightforward.

My inspiration came from this article, which details using WSL2 and Docker with Serge. However, I aimed to simplify things further by using Podman, which offers several benefits:

- The Podman installation automatically installs WSL2

- Podman uses a minimal Fedora installation (no need for Ubuntu)

- No need for git installation or git clone

- Docker Desktop is not required (Podman Desktop is also available, but I have not tested it)

How To

Install Podman and WSL2

- Podman is an open-source, lightweight and more secure alternative to Docker (it runs containers without a central root daemon). I largely followed this tutorial.

- WSL2 (Windows Subsystem for Linux) lets you run a GNU/Linux environment directly on Windows. It may sound difficult, but it is easy to install!

Follow the steps below:

- Go to the Podman releases page, scroll down to the `Assets` of the latest version and download the installation file `podman-<version>-setup.exe`.
- Start the installation and make sure `Install WSL if not present` is checked.
- Click `Install`.
- Let the computer restart after the installation.
- After the restart, the installation is finished.

Now configure WSL2 so that enough memory is available for Serge. I set this in the global WSL configuration.

- Click

- In Windows Explorer, go to `%UserProfile%`, create the file `.wslconfig` and add the following to it:

```ini
# .wslconfig
# Settings apply across all Linux distros running on WSL 2
[wsl2]
# Limits VM memory to use no more than 20 GB, this can be set as whole numbers using GB or MB
memory=20GB
# Optional: Sets the VM to use two virtual processors
# processors=2
# Optional: Sets amount of swap storage space to 8GB, default is 25% of available RAM
# swap=8GB
# IMPORTANT: Please read the instructions below
```

Instructions:

- Required: Replace `20GB` with the maximum amount of memory you want Serge to use. Here you can find more information about memory usage.
- Required: Replace

- Start `Windows PowerShell` (you can find this app by searching in the Start menu).
- Apply the new config by restarting WSL2:

```powershell
# Open the command prompt
wsl --shutdown
```

- Run the following commands to initialize and start the virtual machine with Podman:

```powershell
# Open the command prompt
podman machine init
podman machine start
```
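If you would rather script the `.wslconfig` step than edit the file by hand, the Python sketch below writes a minimal `[wsl2]` section. It assumes Python is installed on Windows; `write_wslconfig` is a hypothetical helper (not part of WSL or Podman), and it overwrites any existing `.wslconfig`. The `memory` check only accepts whole numbers with a `GB` or `MB` suffix, following the comment in the config above. You still need to run `wsl --shutdown` afterwards for the new limit to apply.

```python
import re
from pathlib import Path
from typing import Optional


def write_wslconfig(memory: str = "20GB",
                    processors: Optional[int] = None,
                    swap: Optional[str] = None,
                    home: Optional[Path] = None) -> Path:
    """Write a minimal global .wslconfig (hypothetical helper, overwrites the file)."""
    # Whole numbers with a GB or MB suffix, e.g. "20GB" or "16384MB".
    if not re.fullmatch(r"\d+(GB|MB)", memory):
        raise ValueError(f"memory must look like '20GB' or '512MB', got {memory!r}")

    # On Windows, Path.home() resolves to %UserProfile%.
    target = (home if home is not None else Path.home()) / ".wslconfig"

    lines = [
        "# Settings apply across all Linux distros running on WSL 2",
        "[wsl2]",
        f"memory={memory}",
    ]
    # Optional settings are only written when given, like the commented-out lines above.
    if processors is not None:
        lines.append(f"processors={processors}")
    if swap is not None:
        lines.append(f"swap={swap}")

    target.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return target
```

On Windows, calling `write_wslconfig(memory="20GB")` would write `%UserProfile%\.wslconfig`; keep a copy of any existing file first, since the helper replaces it.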

Install Serge

- Choose the location for Serge (in my case `c:\podman\serge`) and create the folders for the weights and data:

```powershell
# Open the command prompt
mkdir c:\podman\serge\weights
mkdir c:\podman\serge\datadb
```

- Create the batch file that will create the Serge container:

```powershell
# Open the command prompt
notepad c:\podman\serge\podman-run-serge.bat
# And choose `yes` to create the file
```

- Paste the following into Notepad:

```bat
REM podman-run-serge.bat
REM IMPORTANT: Please read the instructions below
podman run -d --name=serge --hostname=serge -p 8008:8008 -v c:\podman\serge\weights:/usr/src/app/weights -v c:\podman\serge\datadb:/data/db/ --restart unless-stopped ghcr.io/serge-chat/serge:main
```

Instructions:

- Required: Replace `c:\podman\serge` with the location of Serge if needed.
- Required: Replace

- Close Notepad and save the changes.
- Run the batch file to create the Serge container, then check whether the container is running with the following command:

```powershell
# Open the command prompt
podman ps -a
```

Check the results

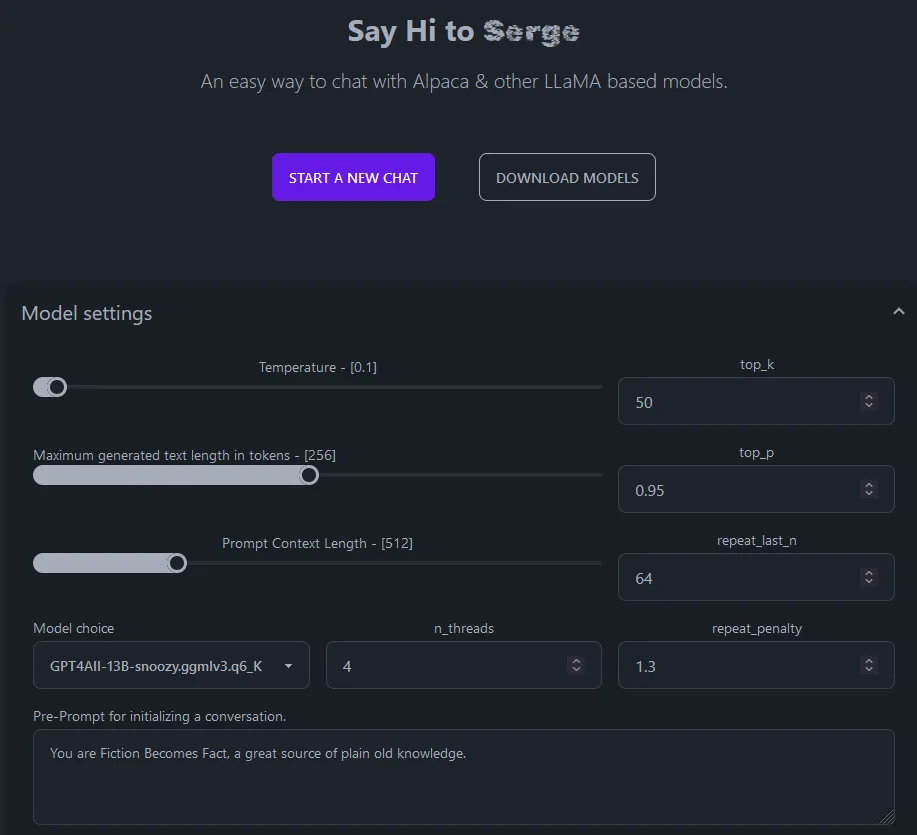
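The folder creation and the `podman run` line from the batch file can also be expressed as a small Python sketch, which keeps the base folder and host port in one place. This is an assumption-laden sketch, not part of Serge: `prepare_folders` and `serge_run_args` are hypothetical helper names, and the image name, container paths and port are copied from the batch file above. The second helper only builds the argument list; it does not start anything.

```python
from pathlib import Path, PureWindowsPath
from typing import List


def prepare_folders(base: Path) -> List[Path]:
    """Create the weights and datadb folders under base (hypothetical helper)."""
    folders = [base / "weights", base / "datadb"]
    for folder in folders:
        # parents=True creates missing intermediate folders; exist_ok makes reruns safe.
        folder.mkdir(parents=True, exist_ok=True)
    return folders


def serge_run_args(base: str = r"c:\podman\serge", port: int = 8008) -> List[str]:
    """Build the same podman run command as podman-run-serge.bat (hypothetical helper)."""
    root = PureWindowsPath(base)
    return [
        "podman", "run", "-d",
        "--name=serge", "--hostname=serge",
        # host port : container port (the container side stays 8008, as in the batch file)
        "-p", f"{port}:8008",
        "-v", f"{root / 'weights'}:/usr/src/app/weights",
        "-v", f"{root / 'datadb'}:/data/db/",
        "--restart", "unless-stopped",
        "ghcr.io/serge-chat/serge:main",
    ]
```

On Windows, `subprocess.run(serge_run_args(), check=True)` should then create the container in the same way as running the batch file.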

Now you can browse to Serge by opening a web browser and going to: http://127.0.0.1:8008.

Replace `127.0.0.1` with the relevant IP address or FQDN if needed, and adjust the port if you changed it in the batch file.
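To check from a script that the web interface is reachable (the container can take a moment to start), a minimal Python sketch using only the standard library can poll the URL until it answers. The helper name `wait_for_serge`, the timeout and the polling interval are assumptions. It deliberately ignores proxy settings, which suits a local address but may not suit a remote FQDN.

```python
import http.client
import time
import urllib.request


def wait_for_serge(url: str = "http://127.0.0.1:8008",
                   timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll url until it answers with a 2xx status or timeout seconds pass (hypothetical helper)."""
    # Bypass any configured HTTP proxy, since Serge runs on this machine.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener.open(url, timeout=5) as resp:
                if 200 <= resp.status < 300:
                    return True
        except (OSError, http.client.HTTPException):
            # URLError/HTTPError are OSError subclasses: refused, reset, timed out or error status.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_serge()` returns `True` once the page at `http://127.0.0.1:8008` answers, or `False` after roughly a minute.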

Models downloaded through Serge are stored in the weights folder (for example `c:\podman\serge\weights`). You can also place manually downloaded models there.
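To see which models are currently in the weights folder, for example after copying in a manually downloaded model, a short Python sketch can list the files with their sizes. It makes no assumption about the model file format and simply lists every regular file; `list_models` and `format_size` are hypothetical helper names, and sizes are shown in binary gigabytes (GiB).

```python
from pathlib import Path
from typing import List, Tuple


def list_models(weights_dir: Path) -> List[Tuple[str, int]]:
    """Return (file name, size in bytes) for every file in weights_dir, largest first."""
    files = [p for p in weights_dir.iterdir() if p.is_file()]  # skip subfolders
    return sorted(((p.name, p.stat().st_size) for p in files),
                  key=lambda item: item[1], reverse=True)


def format_size(num_bytes: int) -> str:
    """Human-readable size using binary units (1 GiB = 1024**3 bytes)."""
    return f"{num_bytes / 1024**3:.1f} GiB"
```

Usage on Windows: `for name, size in list_models(Path(r"c:\podman\serge\weights")): print(name, format_size(size))`.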

Because of hardware limits, I use 7B or 13B models, which need less than 20GB of memory. Responses are still slow (sometimes minutes of waiting), but it is fun to experiment with!
